The emergence of AI agents lets us reimagine the ways we interact with our digital devices. Modem teamed up with Mouthwash Studio to explore the next phase of user interface design, as enabled by AI. General Purpose Interfaces presents a set of principles for designing a single interface that can replace many.

The user interface – the space where humans and computers meet – gives digital technology its presence in the world, making it tangible and accessible. By shaping what we can instruct computers to do, interfaces determine the specific ways that they can be useful to us.

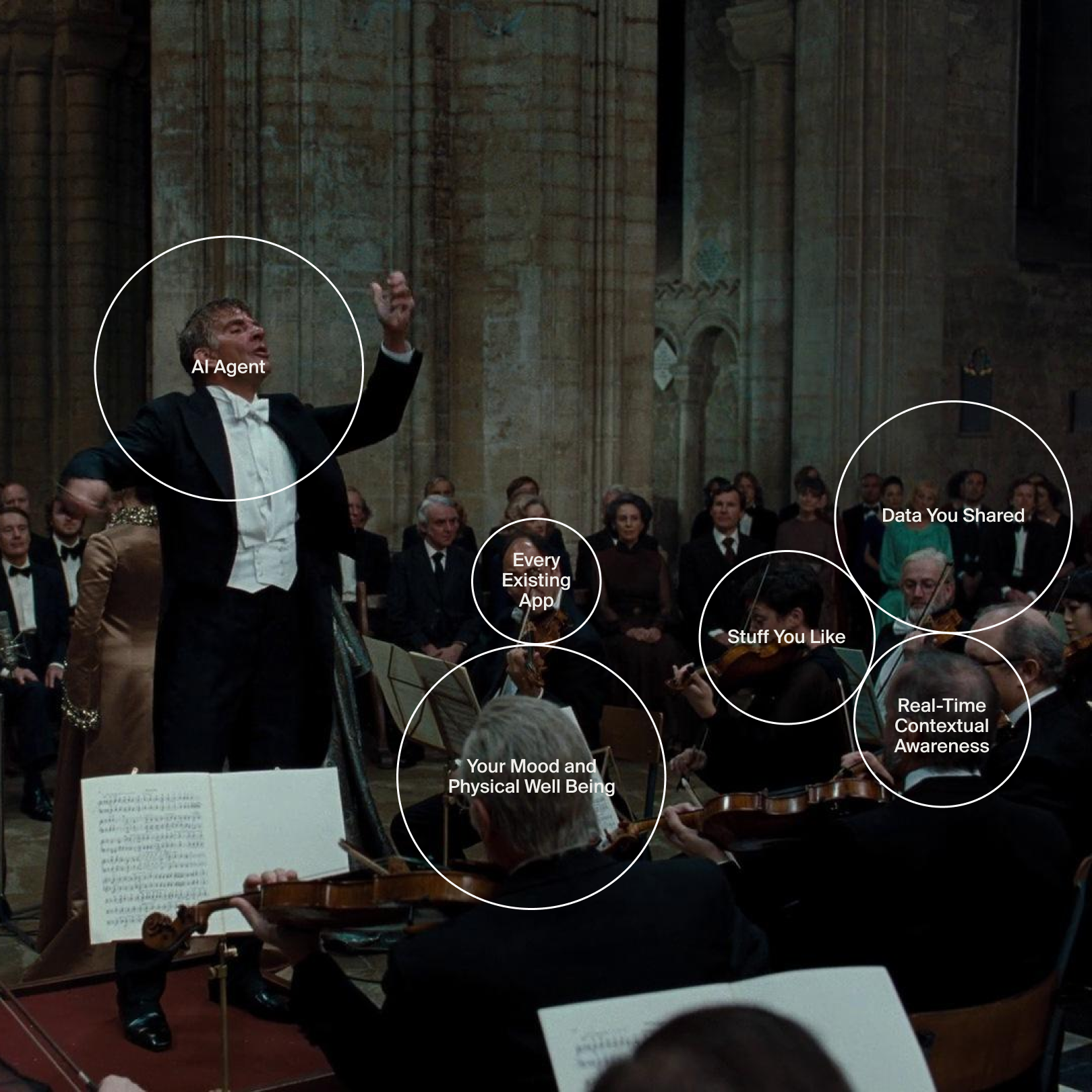

But what if an AI agent were the one using our devices instead of us, acting as an intermediary between the software and the humans it’s ultimately meant for? Large language models (LLMs) have the potential to work with these devices’ existing interfaces, replacing the app-based smartphone paradigm with something simpler and more intuitive.

This might seem to portend the disappearance of human-facing interfaces altogether, but it would actually unify them under a single umbrella – a new type of interface between the user and the AI model, which does everything. “A good tool is an invisible tool,” computer scientist Mark Weiser of Xerox PARC said. “By invisible, I mean that the tool does not intrude on your consciousness; you focus on the task, not the tool.” These AI interfaces will likely fit Weiser’s description, being so seamless and intuitive that we barely recognize them as interfaces at all: General Purpose Interfaces (GPIs).

General Purpose Interfaces accept the widest possible variety of input and produce an equally expansive range of output. By incorporating vision and audio, along with text, these interfaces can better mimic human perception, making them more useful and more usable.

The user interface’s transformation by AI is already well underway, but it is far from complete. Today, the most familiar AI interface is ChatGPT’s text prompt field, which evokes Google search as well as the command-line terminals of early computing. Text is well suited to this transition phase: Language, in written and spoken form, is flexible and intuitive, a natural medium for approaching and exploring an unfamiliar technology.

But these text interfaces are also limited. Too often, they confine our usage of LLMs and other AI products to the patterns established by digital tools we already know. Recent developments like OpenAI’s GPT-4o (the “o” stands for “omni”) and Google’s Project Astra improve upon text interfaces by accepting multimodal input – vision and audio in addition to text – which lets them perceive the world more as humans do and makes them more useful as a result. As John Culkin said, “we shape our tools and, thereafter, our tools shape us.” We must consciously shape LLMs – and their interfaces in particular – if they are to shape us in the ways we want. Interfaces do not merely respond to new computing paradigms. They create them.

As an example, what if Apple incorporated an LLM into your iPhone that could monitor everything you do with the device – your browsing history, locations, photo library, messaging, and more? This would enable a variety of new features and services, from simple conveniences like reminder notifications to robust assistance in important domains like health and finance. Such AI enhancement would reframe the iPhone as your digital twin, an always-on companion that learns how to be most helpful by observing how you use the device and what you do in the course of a typical day.

General Purpose Interfaces synthesize information from a disparate array of data sources to solve problems and answer questions. They draw upon calendars, notes, email and messaging history, and any other available data the user has generated, combining all of it into a comprehensive understanding of that individual, which makes it possible to deliver more accurate and useful responses.

Apple’s ReALM (Reference Resolution As Language Modeling), as described in a recent research paper, is poised to support the type of interface just described. The ReALM system converts a device’s on-screen visual content and other contextual information into text, then parses that text with an LLM along with the commands that the user provides directly. This should enable interfaces that synthesize as much information as possible in order to meet the user’s wide variety of needs.
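To make the idea of turning a screen into text more concrete, here is a minimal sketch in Python. It is not Apple’s implementation: the `ScreenEntity` type, the `screen_to_text` and `build_prompt` helpers, the entity kinds, and the prompt layout are all hypothetical, chosen only to illustrate how on-screen content, other context, and a direct user command might be flattened into one piece of text for a language model to read.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ScreenEntity:
    """Hypothetical description of one item visible on screen."""
    kind: str  # assumed label, e.g. "phone_number" or "business_name"
    text: str  # the visible text of the item
    x: int     # left edge, in pixels
    y: int     # top edge, in pixels


def screen_to_text(entities: list[ScreenEntity]) -> str:
    """Flatten entities into numbered lines, top-to-bottom then left-to-right."""
    ordered = sorted(entities, key=lambda e: (e.y, e.x))
    return "\n".join(
        f"[{i}] {e.kind}: {e.text}" for i, e in enumerate(ordered, start=1)
    )


def build_prompt(
    entities: list[ScreenEntity],
    command: str,
    context: dict[str, str] | None = None,
) -> str:
    """Combine screen text, optional context, and the user's command."""
    parts = ["On-screen content:", screen_to_text(entities)]
    if context:
        parts.append("Other context:")
        parts.extend(f"- {key}: {value}" for key, value in sorted(context.items()))
    parts.extend(["User request:", command])
    return "\n".join(parts)


# Illustrative data only; a real system would read this from the device.
entities = [
    ScreenEntity("phone_number", "555-0100", x=40, y=300),
    ScreenEntity("business_name", "Corner Pharmacy", x=40, y=120),
]
prompt = build_prompt(entities, "Call this number", {"foreground_app": "browser"})
print(prompt)
```

The resulting text would then be handed to a model, which could work out that “this number” refers to entity [2]. The numbering is a design choice of the sketch: it gives the model a compact way to point back at a specific item on screen.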

A fundamental design challenge for GPIs is to balance LLMs’ enormous range of possibilities with the proper constraints to make them approachable and usable. Technology analyst Benedict Evans calls this a “blank canvas” challenge, similar to that of an Excel spreadsheet, which presents an infinite grid where the user can seemingly do anything. ChatGPT’s empty prompt field presents a comparable challenge: One can type anything into the box, but not everything will yield a useful response. GPIs have the opportunity to improve upon this by guiding users to provide inputs that lead to desirable results, and by gathering data that help the model better understand what the user wants.

General Purpose Interfaces respond to observed user behavior, adapting to different social and environmental contexts that create different needs. A certain set of circumstances – a morning commute or a night out with friends – could have implications for every facet of a GPI, from the interface’s layout to feature availability to the nature of its responses, all of which can change in order to best assist the user under their current conditions.

GENERALIZING THE SPECIALIZED

AI’s generalization of the user experience is a reversal of the specialization that mobile apps fostered during the previous era of digital interfaces. The app is the basic unit of the smartphone interface; apps are useful because they constrain and focus behavior, removing choices to foreground the few that are most relevant, thereby streamlining specific actions such as map navigation or checking the weather. Apps once made a rapidly expanding internet more tractable by unbundling its frequently used elements and placing them on the smartphone’s home screen. But now the app ecosystem has itself become sprawling, with nearly 2 million apps available in Apple’s App Store. GPIs can help to rebundle what was previously unbundled, yielding a single software product capable of performing any tool’s function.

The first wave of AI voice assistants, like Siri and Alexa, represented a superficial attempt at creating a GPI. These assistants only generalized their input, via speech recognition and natural language processing, while offering a narrow range of output that merely replicated the functionality of a few apps. “Alexa and its imitators mostly failed to become much more than voice-activated speakers, clocks and light-switches,” Evans writes. “Though you could ask anything, they could only actually answer 10 or 20 or 50 things, and each of those had to be built one by one, by hand.” In other words, these assistants merely reproduced the smartphone’s app-based interface in a different medium, voice, while disregarding plenty of information that might have helped to make them more useful. These devices still required the user to know which features were available as well as the commands needed to access them – similar to how using a smartphone still requires knowing which apps are available on it and what they can do.

General Purpose Interfaces work with our existing digital infrastructure, acting as a layer on top of our legacy systems and apps rather than replacing them. This interoperability will be essential to GPIs as they evolve. By aligning with a wide range of devices and systems, GPIs will preserve those systems’ existing benefits while making them even more useful by consolidating them under a single interface.

Products like Siri and Alexa suggest that voice alone is not enough to constitute a GPI, and that being intuitive is not the only criterion. The expansiveness of LLMs calls for a more sophisticated interface than smartphone apps in a different medium. Technology writer and analyst Ben Thompson posits that being “just an app” won’t suffice “if the full realization of AI’s capabilities depends on fully removing human friction from the process.” Again, multimodal interfaces supported by technology like ReALM, which uses a range of contextual information to better understand what users are asking, are likely to overcome earlier voice assistants’ limitations. GPIs will have to learn what their users want and then know where to find it, translating their queries as well as their unstated preferences into actionable steps.

FROM APPS TO AGENTS

Existing LLM interfaces like ChatGPT must continue to evolve. “Prompt engineering” resembles the text-based past of digital interfaces more than their future. GPIs will have to be simple and intuitive, of course, but these descriptive terms can lead us astray if we fail to understand the purposes they serve. The desired simplicity arises from the LLM’s ability to streamline the user’s interactions with the variety of existing interfaces – it is one interface that replaces many. “Intuitive,” meanwhile, is just another way of describing the breadth of input types the GPI will accept: voice, text, imagery, and more. This feels intuitive, again, because it’s more similar to how humans perceive the world and interact with one another. OpenAI’s GPT-4o, with its multimodal capabilities, thus offers an early glimpse of a GPI.

General Purpose Interfaces adapt to the user’s fluctuating moods and biological states. They detect problems like anxiety or poor sleep and adjust their UX and output to help alleviate them, in the interest of maximizing holistic well-being. To accomplish this, the GPI will require access to the user’s biometric and health data, combining that with knowledge about their preferences and personality.

A GPI that accepts nearly any input format is still only as good as the model that powers it, however. Not only will these tools rely on natural language processing and similar capabilities to interpret what the user is telling them, but they will need the intelligence to fill in the blanks and figure out what the user really wants, as Apple’s ReALM attempts to do. This requires synthesizing data about the user – their past behavior, their preferences, and their ever-changing circumstances – with more general insight into human behavior. “You’ll simply tell your device, in everyday language, what you want to do,” Bill Gates writes. “And depending on how much information you choose to share with it, the software will be able to respond personally because it will have a rich understanding of your life. In the near future, anyone who’s online will be able to have a personal assistant powered by artificial intelligence that’s far beyond today’s technology.”

A well-designed GPI is essential to realizing the full potential of LLMs. Ideally, these interfaces will enhance our usage of the technology to the point that it effectively becomes invisible, as Mark Weiser said good tools should do. The above principles will help GPIs achieve this outcome. When done well, Venkatesh Rao writes, the “effect of AI is to deepen our engagement with the world, blurring the boundaries between us and our tools, and between our tools and the world.” A GPI, then, isn’t a tool so much as it’s a meta-tool – a single interface for reality itself.