Do hand gestures like the Apple Vision Pro’s double tap represent a giant leap forward in human-computer interaction, or just a new way of doing the same things? Modem collaborated with artist Sanne van den Elzen to explore the capabilities of hand gestures beyond conventional tapping, pinching, and dragging techniques. Coded Gestures reimagines hand-based interactions as the world of spatial computing takes shape.

Until 12,000 years ago, much of Europe was buried in ice. Seeking refuge from the unforgiving climate, groups of humans gathered in caves, some of which were later sealed by sliding mud as the climate warmed. Inside the airtight rooms were pictures painted on the walls: of animals, abstract patterns and stencils of hands exactly like ours.

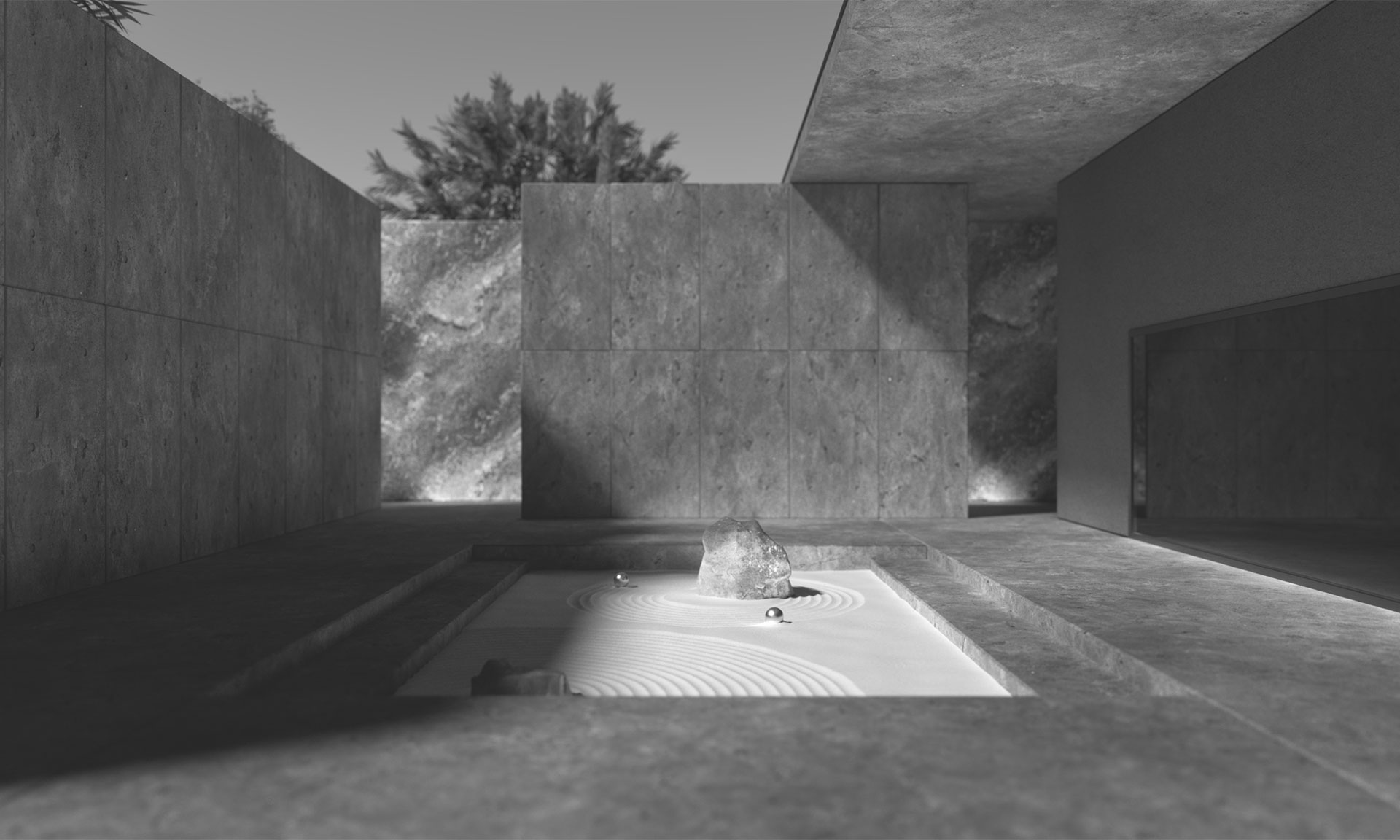

Nobody is quite sure why these stencils were made, but the practice was widespread. They have been found in Europe, Eastern Asia, South America and Australia, reaching back at least 50,000 years. Were they artists’ signatures, claiming authorship over the paintings of now rare or extinct species like the banteng or mastodon? Were they a record of the creator’s existence – like fingerprints or selfies? Perhaps they were just hands for hands’ sake: a tribute to the technology with which we had already begun to transform the planet.

A SHOW OF HANDS

It’s quite accurate to call the hand a technology. Our hands are the result of what cognitive scientist Rafael Núñez calls “biological enculturation,” whereby our bodies gradually transform in response to cultural habits. Over many generations, our hands developed to manipulate the primitive tools that Homo habilis began using more than two million years ago. Our shoulder anatomy, too, was shaped by launching projectiles hard and fast while hunting. Our jaws shrank because we learned to soften foods by cooking them, creating more room in the skull for an expanding brain with which to further develop our techno-cultural niche.

There’s a well-worn quote – often attributed to Marshall McLuhan – that reminds us how “we shape our tools and thereafter our tools shape us.” (The line was actually by Father John Culkin, a communications professor and priest, in an article about McLuhan.) It could be a guiding principle for platform design, interaction design and engineering – yet few likely realize just how personal the world-shaping power of tools can be for those who wield them.

At Apple’s September 2023 event, COO Jeff Williams introduced the double tap gesture as a way to interact with the company’s Series 9 watch: tapping the index finger and thumb together twice in quick succession to silence an alarm, answer a call or pause a song. In essence, it’s the click upgraded for mixed reality, which Apple refers to as spatial computing. The watch senses the gesture using its accelerometer, gyroscope and optical heart sensor.

Those paying close attention would have recognized the gesture from the Vision Pro headset announcement, where it joins a series of other movements – pinches, drags, and rotations – to manipulate objects in 3D space. The introduction of hand gestures in an existing product line will give Apple plenty of data about how people might adapt to the change. It gives developers advance warning so they can think about how their software might be manually operated (from the Latin manus, “hand”). It will also give us a foretaste of offices and streets and homes filled with people gesticulating wildly as they go about their day. But is all this really an upgrade?

A ROLLS-ROYCE WITHOUT AN ENGINE

Apple’s ARKit software tools enable the creation of custom gestures for its devices. Yet the company encourages developers to “lean on familiar patterns” so users can “really focus on the experience instead of having to think about how to perform the interaction.” The rationale for this is obvious: the company is seeking to ease the transition into AR, and importing legacy UX interactions (as well as virtual renditions of flat screens) is the safest way to do that. But after nearly two decades of touchscreen interaction, the liberation of hands from mice and keyboards ought to make us wonder what else might be possible.

In his book Hands (1980), the primatologist and former orthopedic surgeon John Napier observes that we love dexterity in animals – the elephant lassoing an apple with its trunk or a chimpanzee brandishing a grape – but often fail to appreciate our own. Hands take immensely detailed readings of the physical environment. They help us to evaluate the unspoken and unseen: the volume of liquid remaining in a cup, for example, or the degree of intimacy between two people we just met. When we pick up a stick at one end, we feel the whole object. When we run it across a surface, we feel that surface. If we use it to hit something, it becomes an embodied part of us.

Hands are not only instruments for interfacing with the material world but are key to social and even cognitive activity. In her book The Extended Mind (2021), Annie Murphy Paul notes how gestures help us pin down vague thoughts, offering a “proprioceptive hook” that distributes cognitive load and assists memory. Gestures accompany everyday speech. They replace it when we lack a shared language, or when speech becomes inaudible or dangerous.

Gestures enable us to speak in silence, to carry out specific tasks like aircraft marshaling, market trading, or stalking animals in the bush. In the Buddhist, Hindu and Jain traditions, “mudras” are ritual gestures that channel specific elements and seek out physical effects. Then there are the secular symbols: a finger to the lips for quiet or a squiggle in the air to request the bill. And yet most modern devices reduce the vast repertoire of possible gestures to a handful of single-finger or multi-touch inputs on flat screens. “The disenfranchised hand,” wrote Napier, “is like a beautiful Rolls-Royce that has no engine under the bonnet – elegant but useless.”

TOUCH HUNGER

The first touchscreen was developed in 1965 by British engineer Eric Johnson of the Royal Radar Establishment, in an attempt to speed up interactions between air traffic controllers and their computers. From there, the technology bifurcated into “capacitive” touchscreens, which use the conductive properties of a finger or stylus, and “resistive” ones, which rely on pressure to bring two conductive layers into contact in order to locate the point of touch.

Resistive touchscreens are often seen in ATMs, point-of-sale devices, hospitals and heavy industry. They’re robust and cheap to manufacture. Back in 2007, 93 percent of all touchscreens produced globally were resistive. By 2013, after the arrival of the iPhone, just 3 percent were resistive while 96 percent were capacitive. Both types enable what interface designer Bret Victor calls “pictures under glass,” reducing the affordances of hands to a single fundamental gesture: “sliding a finger along a flat surface.”

Project Soli, from Google’s Advanced Technology and Projects group (ATAP), was introduced in 2015. It uses a highly sensitive, low-power radar transceiver to exit the imperium of touchscreens altogether in pursuit of “ambient, socially intelligent devices” that operate with “social grace,” inspired by “how people interact with one another.”

Today the ATAP team is using machine learning to observe how people interact with one another and with their devices. This is laudable – though eight years later Soli has yet to find its mass-market unlock. The team suggests the hand itself could be its own contact surface: moving a volume dial by rubbing the thumb across the palm, for example, taking advantage of the 17,000 touch receptors on the palm to create a smooth gradient rather than a binary click.

Professor Tiffany Field of the University of Miami coined the phrase “touch hunger” to account for the lack of tactility in modern life. This makes sense. Large regions of the brain are dedicated to processing contact with the skin – just think of the cortical homunculus and its enormous hands – and a world of glass surfaces does little to satisfy the cravings of our in-built somatosensory hardware.

In 2021, Meta’s Reality Labs presented a Neural Wristband that uses electromyography to sense motor nerve signals as they pass down the arm towards the hand. The wristband enables communications triggered by tiny finger movements, and the team argues that the wrist is also a prime site for haptic feedback. In the prototype demonstration, a woman pulls back on an AR bow and arrow. The wristband tightens as tension in the virtual bowstring increases.

According to Sean Keller, VP of research science at Reality Labs, AR and VR will catalyze a shift from personal to personalized computing. His colleague, the neuroscientist and programmer Thomas Reardon, cites the possibility of designing one’s own keyboard simply by typing on any surface. This is a compelling proposition. Were the system able to better model the properties of the object, as well as the hand movements upon it, we might begin to approach something like augmented touch with possibilities too precious to give up.

AUGMENTED TOUCH

A future interface design that orients itself towards augmented touch would strive to master everything that touch makes possible in baseline reality, then add the possibilities of the virtual. What this means in practice would be limited only by our biological hardware and access to the data and devices that will stimulate it. Perhaps it means an expecting mother can use her hands to touch the baby in her womb, shoppers can feel the clothing, furniture or fresh produce they clutch at virtual checkouts, or space-obsessed children can access rover data that allows them to build sandcastles from regolith on the surface of Mars.

This in turn could open the way to everyday human-machine interactions where construction workers move bridge parts by arching their hands like the conductor of an orchestra, or scientists manipulate cell walls, join molecules and fuse nanofibres by using the evolved dexterity of their hands rather than immediately passing off the job to a machine.

“The goal of neural interfaces is to upset this long history of human-computer interaction and start to make it so that humans now have more control over machines than they have over us,” says Reality Labs’ Reardon. One wonders if this is a false division. Mechanical mice, keyboards and finger swipes may have been necessary at the dawn of consumer tech. But the recent reintroduction of hands is inching us closer to where we started: an evolutionary vantage point from which we should expand our capabilities by using tools (just like we always did).
